13 The Anthropocene

Among the most basic but overlooked narratives of human history is the relationship between humans and the rest of the natural environment. More recently, social scientists and historians of the natural world have described the historical epoch in which humans began to have a distinct impact on the natural world as the “Anthropocene.” The Anthropocene is a proposed geological epoch. Yet it can also be thought of as the period when human activity began to have a direct impact on the environment. There are various definitions for the start of the Anthropocene. We might start it with the agricultural revolution, some 12 to 15 thousand years ago. Alternatively, we might start the Anthropocene with the Industrial Revolution, when massive mining operations, plantation agriculture, and a sharp increase in industrial waste had a dramatic impact on the environment. We might also begin the Anthropocene in the 1950s, when radioactive fallout from atmospheric atomic bomb tests (atomic bombs were first used in 1945) spread around the globe, peaking in the early 1960s. However, the 1960s also brought positive turns: the Partial Nuclear Test Ban Treaty (1963) was an early demonstration that international agreements could lead to positive environmental outcomes. Similarly, legislation mandating clean air, clean water, and recycling has proved to have positive environmental impacts. Nonetheless, the debate over how best to mitigate the negative human impacts on the environment continues in the present.

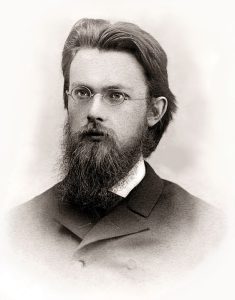

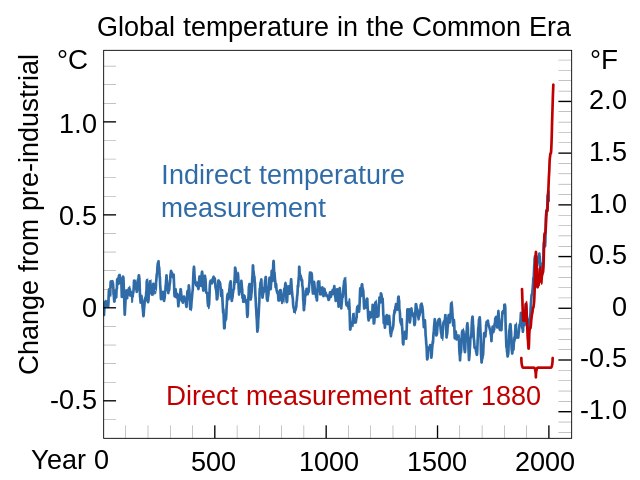

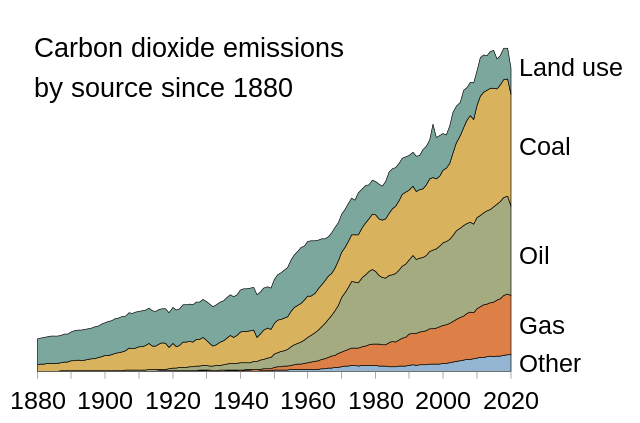

The concept of the Anthropocene has been around in various forms since the early 20th century. The Soviet scientist Vladimir Vernadsky wrote of the “Noosphere” as an era of “scientific thought as a geological force” as early as 1938. By the 1970s, climatologists had reached a consensus that CO2 (carbon dioxide) was changing global average temperatures. However, aerosol pollutants had the opposite effect of cooling temperatures, and until the 1980s it was unclear which of these processes would have the greater impact on the larger dynamics of “climate change.” Although the term “global warming” was popularized first, in the 1980s and 1990s, “climate change” became more common in the 21st century, in part because the anthropogenic forces affecting the environment include more than dramatic increases in emissions of greenhouse gases such as carbon dioxide (CO2) and methane. Climate change also encompasses other atmospheric pollutants, such as the chlorofluorocarbons (CFCs) once common in aerosol spray cans, which caused the infamous hole in the ozone layer over Antarctica. It also includes the destruction of habitats in mountains, coral reefs, and the Arctic, which threatens mass extinction events, as well as increasingly destructive forest fires, hurricanes and typhoons, and other forms of environmental destruction.

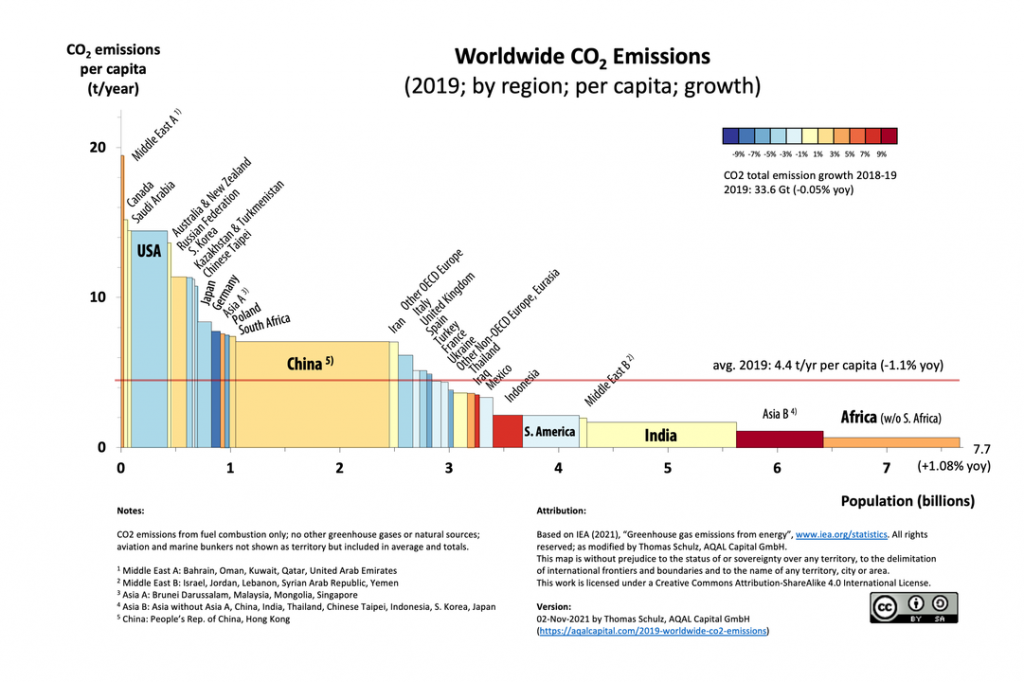

It is important to understand that the root causes of climate change are consumption based, not population based. Per capita, the largest polluting economies in the world according to the IPCC in 2021 were Qatar, Kuwait, Saudi Arabia, Canada, and the United States, in that order. Notably, these top five polluters all have significant petrochemical (oil) sectors in their economies. Additionally, all have large shipping industries relative to their populations, and shipping is a major contributor to pollution. Where, you might ask, does a populous country like the People’s Republic of China (PRC) appear in the report? The world’s largest producing economy ranked seventh, just behind Germany, which most likely ranks so high because of the impact of the German automobile industry. When the data are not weighted by population, the United States jumps from fifth place to second. The PRC and India rank first and third on that unweighted list, which is where most would expect two countries that together contain more than a third of the world’s population to rank. Yet the evidence from the United States and the per capita rankings shows that the greatest impact on the environment does not come from the most populous countries. Indeed, a report in the first years of the 21st century revealed that if the Department of Defense (DOD) were ranked separately from the rest of the United States, it would fall between Iraq and Sweden, roughly in the mid-30s among the most polluting countries in the world.

Despite all the evidence that the mark of the Anthropocene is a mark of consumption by extremely wealthy individuals and nations, contemporary debates occasionally revive disproven concerns about the impact of “overpopulation.” The vast majority of contemporary researchers believe that overpopulation is not a major concern. Nonetheless, the mistaken position that it is has historical roots that we can trace to the work of an English economist named Thomas Robert Malthus (1766–1834). Malthus published a short book, An Essay on the Principle of Population, in 1798. The book was widely read, including by the plantation slave owner and future U.S. president Thomas Jefferson, who sent a copy to his favorite economist, the French liberal economist Jean-Baptiste Say. Say would go on to criticize Malthus’s work extensively, as Malthus would criticize his. Say’s law held that production in one market would generate demand in another market simply by supplying new goods. Malthus, however, held that markets were limited by the ability of the population base to grow. Malthus believed that when the means for population increase were present, the population would increase. However, population was also inherently limited by subsistence; if there was “overpopulation,” the means of subsistence would be stretched too thin, and society would collapse. In other words, population would theoretically grow as long as there was enough food, water, space, and so forth, but when these resources were exhausted, the population would collapse under famine, disease, and war. The most immediate critics of Malthus’s theories attacked the work from a religious perspective. They argued that it was a soft form of advocacy for birth control and contradicted the biblical injunction to go forth, be fruitful, and multiply. However, a close reading of Malthus’s work reveals that he actually preferred postponement of marriage and celibacy as “preventive checks” on population.
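Malthus’s boom-and-collapse reasoning can be made concrete with a toy simulation. The sketch below is only an illustration, not Malthus’s own formulation: it borrows his well-known contrast between population growing geometrically and subsistence growing arithmetically, and the function name `malthus_toy_model` and every parameter value (growth rate, food increment, size of the collapse) are hypothetical choices made to show the pattern described above.

```python
def malthus_toy_model(pop0, food0, growth_rate, food_increment, crash_fraction, periods):
    """Toy Malthusian model (hypothetical parameters, illustration only).

    Population is multiplied by (1 + growth_rate) each period (geometric growth),
    while subsistence rises by a fixed food_increment (arithmetic growth).
    When population outruns subsistence, a "positive check" (famine, disease,
    war) cuts it to crash_fraction of the current subsistence level.
    Returns the population at the end of each period, rounded to 2 decimals.
    """
    pop, food = pop0, food0
    history = []
    for _ in range(periods):
        pop *= 1 + growth_rate          # geometric growth of population
        food += food_increment          # arithmetic growth of subsistence
        if pop > food:                  # means of subsistence exhausted
            pop = crash_fraction * food # positive check: cut back to a share of subsistence
        history.append(round(pop, 2))
    return history


if __name__ == "__main__":
    # Hypothetical start: 100 people, food for 150, 10% growth per period.
    print(malthus_toy_model(100, 150, 0.10, 5, 0.8, 10))
```

With these made-up numbers the population climbs for six periods and collapses in the seventh. Changing `food_increment` or `growth_rate` shifts when, or whether, the collapse occurs; the model says nothing about whether real populations behave this way, which is exactly what the critiques of Malthus dispute.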

Malthusianism was revived during the 20th century as neo-Malthusianism. Critics of the infamous one-child policy in the People’s Republic of China called the policy “Malthusian” or “neo-Malthusian” to suggest that, in trying to address the PRC’s overstretched resources, it misplaced the blame. Introduced in 1980 by Deng Xiaoping, Chen Yun, and Li Xiannian, the one-child policy held that families should have only one child in order to reduce birth rates. Strict enforcement of the policy in some regions of the PRC in the 1980s was widely criticized internationally, especially in the United States. However, the policy had broad exceptions that are often overlooked: minority communities whose religions and traditions included support structures for larger families were exempt. Moreover, the birth rate in the PRC increased after the policy was implemented and did not begin to decline steadily until other factors came into play. As the economy of the PRC developed, by the 1990s the birth rate was declining steadily, following the pattern of much of North America and Europe. In other words, what lowered birth rates was not the one-child policy but the eradication of poverty, the dramatic expansion of women’s education, and women’s increased ability to choose whether to have children.

In Europe and the United States, neo-Malthusianism was revived in the post–World War II climate of the so-called Baby Boom. As birth rates shot upward, concerns of population stress were widespread. In 1968, Stanford University biology professor Paul R. Ehrlich published a sensational book called The Population Bomb, which was an instant bestseller. It began with the statement, “The battle to feed all of humanity is over. In the 1970s, hundreds of millions of people will starve to death in spite of any crash programs embarked upon now. At this late date, nothing can prevent a substantial increase in the world death rate.” Ehrlich became an instant celebrity and publicized his theories of social collapse on popular media like Johnny Carson’s Tonight Show. Ehrlich’s scenarios inspired some great dystopian science fiction, but luckily they did not happen in the way Ehrlich had predicted. Actually, a couple hundred million people did die of hunger in the decades after The Population Bomb was published. It’s hard to tell exactly because when the United Nations reported worldwide deaths in the 1970s and 1980s, it specifically omitted deaths from hunger. But these deaths did not reduce world population overall or result in the social chaos that Ehrlich had prophesied.

The Population Bomb may have done more harm than good in the long run by making worry over the population issue an easy target for critics. But people remained concerned about the rapid increase of the world’s human population. The Club of Rome, founded at a 1968 meeting held at the Accademia dei Lincei in Rome, Italy, consisted of many former heads of state, U.N. administrators, and high-level politicians. Its concerns were set out in the 1972 publication The Limits to Growth, which relied on computer modeling to show that resources would decline as population and pollution increased. At some point, without substantial changes in resource consumption, “the most probable result will be a rather sudden and uncontrollable decline in both population and industrial capacity.” The main criticism of the report’s premise that still holds today is that it misplaces the problem of consumption. The main driver of resource consumption is not population growth so much as particular types of consumption, a point that, ironically, the publication’s own conclusion reveals.

A main criticism of Malthusian and neo-Malthusian thought is that it has been used to argue that resources should not be given to impoverished populations. That argument holds that if resources were given to impoverished populations, those populations would simply explode and demand more resources, eventually causing a collapse through starvation, disease, and war. In other words, the argument was that workers’ wages could not be raised because they would spend more on food, be better fed, and then have larger families. However, the assumptions of Malthusians and neo-Malthusians, along with the many British and American upper-class elites who made this argument, were completely wrong. The eradication of poverty in the PRC, discussed above, has led to a continued decline in birth rates. The same is true in Brazil, the United States, and every other substantial example we have access to. As social welfare support for working populations increases, economic security increases, and birth rates tend to fall. A newer counterargument among neo-Malthusians holds that birth rates must therefore be raised to meet labor shortages. However, this argument again misplaces the problem. Based on the evidence of the past several decades, labor shortages are caused not by an overall lack of workers but by inadequate compensation in key sectors, especially the agricultural sector.

You can view “Save the planet or save the people?” on YouTube at this link: https://youtu.be/2HKBC4MjUsI

Another assumption behind Malthusian and neo-Malthusian positions has been that food production would increase more slowly than population. The history of the world during the 19th and 20th centuries shows that this is not the case. The Third Agricultural Revolution of the 1950s and 1960s, also known as the “Green Revolution,” brought new technologies, the application of the scientific method to agricultural production, high-yield seeds, more effective use of chemical fertilizers, and mechanized farm machinery, which together dramatically increased crop output worldwide. The Green Revolution unquestionably alleviated hunger for millions, reduced poverty, decreased infant mortality, and reduced potential greenhouse gas emissions. However, the consolidation of farms into large holdings and the continued use of certain chemical and animal fertilizers have had dramatic negative impacts on the environment.

Industrialized agricultural production has widespread economic liabilities, particularly through its continued reliance on fossil fuels. Fertilizers, insecticides, herbicides, and animal waste severely pollute freshwater supplies and have led to the deterioration of living conditions in rural areas. Environmental scientists still debate the extent to which Liebig’s law (named for the chemist Justus von Liebig) is a key limitation in the study of ecology. Liebig’s law states that growth is dictated by the scarcest resource (the limiting factor) and not by the total resources available. What studies of population and agricultural production often fail to consider is that the limiting factor, once again, is not simply the demand for food and water but the enormous amount of waste produced by a very small portion of the human population, waste that in some cases destroys entire ecosystems or renders them unusable.
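Liebig’s law can be expressed as a simple minimum. In the minimal sketch below, the function name `liebig_growth` and all resource names and amounts are hypothetical: each resource’s supply is divided by what full growth requires, and the smallest ratio (the limiting factor) caps growth no matter how abundant the other resources are.

```python
def liebig_growth(available, required):
    """Apply Liebig's law of the minimum (illustrative sketch).

    available, required: dicts mapping resource name -> amount (hypothetical units).
    A resource missing from `available` is treated as zero supply.
    Returns (limiting_resource, growth_fraction), where growth_fraction is the
    share of full growth allowed, capped at 1.0 when nothing is scarce.
    """
    ratios = {name: available.get(name, 0) / need for name, need in required.items()}
    limiting = min(ratios, key=ratios.get)  # scarcest resource relative to need
    return limiting, min(1.0, ratios[limiting])


if __name__ == "__main__":
    need = {"water": 100, "nitrogen": 40, "phosphorus": 10}
    supply = {"water": 80, "nitrogen": 30, "phosphorus": 5}
    print(liebig_growth(supply, need))  # ('phosphorus', 0.5)
    # Adding more water does not help: phosphorus is still the limiting factor.
    print(liebig_growth({**supply, "water": 1000}, need))  # ('phosphorus', 0.5)
```

In the framing of the paragraph above, pollution that makes water or soil unusable shrinks the `available` amount of that resource and can turn it into the limiting factor.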

Questions for Discussion

- Why did Malthus worry that overpopulation would lead to chaos?

- What has prevented a Malthusian catastrophe so far?

- Why do you think Melinda Gates felt she needed to set the record straight on population?

Until very recently, the Earth’s environment seemed so vast that many societies, though not all, viewed its resources as effectively limitless. Growth-oriented capitalist economies have been particularly driven toward the consumption of resources. For example, as capitalist markets spread through British India in the 19th and 20th centuries, upper-class South Asian elites, British Asians, and British citizens living in South Asia grew dramatically wealthier and had access to plentiful resources. Yet the general population still suffered regular famines. It was only after the British left that an independent India began to develop systems to alleviate famine. Food was provided at discounted rates through public distribution systems, a policy that “democratic socialists” such as Prime Minister Jawaharlal Nehru advocated as especially necessary. During the Green Revolution in India, food production also increased dramatically, with heavy subsidies shaped by Nehru’s understanding of socialist development economics.

Nehru’s understanding of agrarian reform was rooted in a 1948 study by the state government of Uttar Pradesh. By promoting high-yield seeds, green manure, and other sustainable practices, the state government had increased yields by 15% to 30%. The success was primarily a technical one. However, a major impediment to spreading such successes was the limited degree to which large landholders would adopt these practices. Democratic socialists in India, along with supporters of social democracy, revolutionary socialists, and communists, all supported breaking up large landholdings for additional social reasons, aiming to alleviate the poverty of Indian peasant farmers. However, despite Nehru’s powerful position, India’s democratic socialists were unable to gain enough political support to break up the large landholdings, which proved an impediment to the long-term stabilization of agricultural production. Much of the Green Revolution in India was carried out by his daughter, Indira Gandhi, when she was prime minister in the late 1960s and 1970s. However, droughts remained a major impediment. Furthermore, the social climate of India’s politics shifted as criticism of Indira Gandhi mounted. Nonetheless, lessons from the West Bengal droughts of 1979 to 1980 and 1987 would bring to light several similar concerns: the need to properly compensate agricultural workers and make work in the agricultural sector desirable, the need for watershed planning to improve water supply, and the need to foster a social climate of ecologically oriented development.

Ecologically oriented development has been practiced worldwide, with mixed results. In the People’s Republic of China, an ongoing project has aimed to stop the expansion of the Gobi Desert since 1978. Known in English as “the Great Green Wall” but officially called the “Three-North Shelter Forest Program,” the project has involved government-directed planting of strips of trees near the edge of the desert. The forest strips are designed to act as windbreaks and thus prevent sand from burying the vegetation around the edges of the desert. The project also includes planting shrubs and aerially seeding grasses around the forest plots once they are completed. Gravel is also laid around the forest plots to hold off the incursion of sand and encourage soil formation. The fourth phase of the project began in 2003. Official government efforts have also been aided by the work of individual activists, such as Yin Yuzhen.

Yin Yuzhen began working in 1985 by experimenting with botany in her yard. She developed methods to fight soil erosion with planting and began to investigate reforestation. By 2005, she had been recognized as a laomo (model worker) by the local Communist Party secretary. She initially planted willows and poplar trees but found that fast-growing pines could set down roots quickly and last for thousands of years. The resulting program has attempted to follow her model, planting vast numbers of fast-growing pines. As most students will know, monocropping can be a deadly agricultural practice, perhaps best known for its role in the potato famine of the 19th century, which permanently reduced the population of Ireland. However, some party officials in the PRC defended the monocropping of trees, arguing that rapid growth was necessary to offset carbon emissions quickly. Nonetheless, the contemporary consensus has been to use fast-growing pines as an initial measure, then to shore them up with other types of trees, shrubs, and grasses to promote ecological diversity. The latest update to the plan suggests it will last until 2050.

Questions for Discussion

- What monocultures do we depend on today that might make us vulnerable, similar to the Irish potato situation in the 19th century?

- Why did Paul Ehrlich’s book possibly do more harm than good?

- Do you think the possibility of social collapse, even if uncertain, is something we should study more closely?

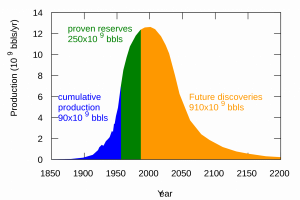

Not all warnings that resources have limits come from university-affiliated academics. Marion King Hubbert was an American geophysicist working for Shell Oil in 1956 when he presented a research paper to an oil industry trade group in which he showed that oil production in the continental United States would reach a peak. He correctly predicted that U.S. continental oil production would peak between 1965 and 1970 and then decline. However, he did not predict the dramatic increase in offshore oil production, the conversion of tar sands, and the expansion of fracking that occurred during the Obama presidency. Nonetheless, he did predict an overall peak in global oil production, which occurred around 2003 and 2004. As a result, oil prices were expected to rise steadily. However, new technologies such as fracking and tar sand conversion have added resources to what is considered “petroleum reserves,” thus also potentially increasing global supplies. Nonetheless, the reality remains: even setting aside concerns about negative environmental impacts, at current production and consumption rates, global oil reserves are expected to decline dramatically by 2050. That said, the environmental cost of consuming at current rates through the coming decades is also immense.
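Hubbert’s peak is commonly modeled as the derivative of a logistic curve: cumulative production follows an S-shaped path toward the total recoverable resource, so annual production rises, peaks, and declines symmetrically. The minimal sketch below assumes that textbook logistic form; the function name `hubbert_production` and all parameter values are hypothetical and are not fitted to any real production data.

```python
import math


def hubbert_production(year, ultimate, k, peak_year):
    """Annual production under a logistic (Hubbert-style) model.

    ultimate: total recoverable resource (hypothetical units)
    k: steepness of the rise and decline (per year)
    peak_year: year in which annual production is highest
    """
    x = math.exp(-k * (year - peak_year))
    return ultimate * k * x / (1 + x) ** 2  # derivative of a logistic curve


if __name__ == "__main__":
    # Hypothetical parameters: 200 units in total, peak in 1970.
    years = range(1900, 2041)
    peak = max(years, key=lambda y: hubbert_production(y, 200.0, 0.06, 1970))
    print(peak, round(hubbert_production(peak, 200.0, 0.06, 1970), 2))
    # Summed over a long enough span, yearly output approaches the total resource.
    print(round(sum(hubbert_production(y, 200.0, 0.06, 1970) for y in range(1800, 2141)), 1))
```

The symmetric bell is an idealization. As the paragraph above notes, new extraction technologies effectively raise the `ultimate` resource and reshape the curve, which is one reason real production has not followed a single clean peak.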

Questions for Discussion

- What did Hubbert mean by an “exponential growth culture”?

Scientists had already begun to suggest in the 1970s and 1980s that the global economy should shift away from fossil fuels. Thanks to research on alternative sources of energy, ranging from new forms of hydropower (harnessing waves or tides instead of waterfalls) to solar and wind energy, these new forms of energy grew rapidly. However, oil industry analysts were often paid outright to produce counterarguments that hindered their adoption. Nonetheless, political winds began to shift as the environmental movement grew. By the middle of the 1990s, bipartisan congressional reports in the United States agreed that climate change, including global warming, was a major issue of federal concern. However, many of these reports’ findings were obscured by the very same politicians when they spoke to the American public, leading to accusations that they had been paid off by oil industry lobbyists and special interest groups. Internationally, the United Nations Framework Convention on Climate Change (UNFCCC) was formulated in the same context. Its parties meet each year at a formal Conference of the Parties (COP), numbered in sequence since the first meeting in 1995. COP27 was held in Sharm El Sheikh, Egypt, in 2022.

In December 1997, parties to the UNFCCC meeting at the COP3 conference in Kyoto, Japan, adopted the so-called Kyoto Protocol, which eventually counted 192 parties. The protocol, which entered into force in 2005, aims to slow the onset of global warming, a very specific and especially disastrous type of climate change. To do so, the Kyoto Protocol calls on member states, particularly the massive consuming economies, to reduce emissions of greenhouse gases and thus their concentrations in the atmosphere. The best-known greenhouse gases are carbon dioxide (CO2), methane (CH4), and nitrous oxide (N2O). However, the Kyoto agreement also covers hydrofluorocarbons (HFCs), perfluorocarbons (PFCs), sulfur hexafluoride (SF6), and nitrogen trifluoride (NF3). HFCs and PFCs had been introduced widely and rapidly as alternatives to chemicals that harmed the ozone layer. Because they are also potent greenhouse gases, however, reducing them would help limit the rise of global average temperatures, per the Kyoto Protocol. Although the 36 developed economies bound by the protocol actually reduced their emissions relative to projections between its signing and 2010, global emissions continued to rise. The “Doha Amendment,” added to the protocol in 2012, extended the agreement to 2020. Importantly, Canada withdrew from the Kyoto Protocol in 2012, and the United States never ratified the agreement. Thus, these two key contributors to global emissions were not bound by the negotiations. Bizarrely, the overwhelming argument among the Republicans (and a smaller portion of Democrats) in the American Congress who opposed ratification was that the United States should not ratify the protocol precisely because it was legally binding.

The American refusal to participate in climate change policy negotiations, especially during the George W. Bush administration, led to a collapse of international climate policy negotiations. An attempt to rebuild the agreements led to a reframing of the Kyoto Protocol as the Copenhagen Accord in 2009. However, the accord was not legally binding and thus had no teeth. Nonetheless, the Copenhagen Accord, along with the Doha Amendment (2012), did contribute to a shift in international attitudes, as more and more world leaders became willing to negotiate on climate policy. Through negotiations before and during COP21 in Paris in 2015, the Paris Agreement was adopted. Negotiations in Paris took place over about two weeks. A critical moment occurred when the American team realized that the word “should” had been replaced by the word “shall” in the legal language of the agreement. Because Obama administration officials viewed this term as too legally binding for the political landscape of the American Congress, some on the team threatened an American pullout. However, the negotiations were saved by French officials who offered an amendment to the wording, arguing that the change was simply a “typographical error.” Although the Paris Agreement’s emissions targets were non-binding, an uninformed American public considered it a major electoral issue. The Trump administration claimed that its promise to withdraw from the Paris Agreement was one of the reasons behind its electoral victory in 2016, and Biden campaigned on rejoining the agreement in 2020. After Biden’s electoral victory, the U.S. rejoined the agreement in February 2021.

A contemporary key term among policy makers and activists who seek to address climate change is “loss and damage.” “Loss and damage” is recognized in the language of the Paris Agreement and refers to the consequences of extreme weather events. As the climate changes, hurricanes grow more frequent, less predictable, and more severe. Furthermore, forest fires become larger, more frequent, and more destructive. Beyond the severity of natural disasters, rising sea levels threaten low-lying islands and most of the world’s major cities. Within the past decade, there has been growing recognition that even though the greatest contributors to climate change are the world’s developed economies, along with the Gulf States, India, and the PRC, the greatest impacts have already been experienced by developing economies or by less recognized parts of developed nations, such as the French overseas department of Martinique or the United States commonwealth of Puerto Rico. The Warsaw International Mechanism for Loss and Damage (2013), established during the COP19 conference, specifically categorizes loss and damage as a subset of adaptation. Officials of countries like the United States and the United Kingdom argued, especially under the Trump (U.S.) and Johnson (U.K.) governments, that they should not be held responsible for the loss and damage that other countries experienced as a result of climate change. The Paris Agreement had included a pledge of US$100 billion a year to support developing countries. However, wealthy nations, especially France, Germany, the United Kingdom, Japan, and the United States, failed to produce the promised funds. Nonetheless, at COP26 (2021), attitudes appeared to begin shifting. Scotland, where the conference was held, became the first country to contribute toward a global loss and damage fund.

The advantage of the concept of “loss and damage” from the perspective of international relations is that it does not narrowly define climate change. It simply suggests that the members of the international community should work together to mitigate the impacts of climate change for the sake of international economic stability and general human well-being. Nonetheless, it is worth clarifying certain elements of the debate on climate change, focusing on the history of carbon emissions. Several aspects of carbon emissions could be mitigated. For example, one of the largest contributors to carbon emissions is the consumption of fossil fuels. There is no question that switching from fossil fuels to other energy sources as quickly as possible, and leaving as much of the remaining fossil fuel reserves in the ground as possible, would reduce emissions, since fossil fuels are the single greatest contributor to anthropogenic climate change. A counterargument suggests that agriculture, forestry, and other land use contribute a similar share of carbon emissions (24% in 2010). However, that counterargument depends on splitting fossil fuel consumption into at least four categories: electricity and heat production (25%), industry (21%), transportation (14%), and buildings (4%). Since fossil fuels are the primary contributors in all of these categories, it is no surprise that fossil fuels account for 78% of carbon emissions, according to 2010 data. Nonetheless, it remains true that agribusiness and deforestation are significant contributors to carbon emissions and therefore to climate change.

But because energy is such a large part of the economies of developed nations, any change is heavily contested. Global energy corporations have an incredible ability to influence politics. A few years ago, BP (British Petroleum, established in 1909 as the Anglo-Persian Oil Company and now operating in 72 countries) issued an Energy Outlook report with projections to 2035. BP claimed that Hubbert’s peak oil scenario was incorrect and announced the company’s intention to burn as much as possible over the next two decades. BP’s claim that oil production hasn’t peaked, however, depended on redefining the word “oil” to include both tar sands and biofuels such as ethanol. Ethanol production depends not only on the energy-intensive production of surplus corn and sugarcane (the primary plant source in Brazil) but also on government subsidies that keep the prices of these commodities below their cost of production. So it’s hard to see how biofuels could legitimately be called a new source of “oil.”

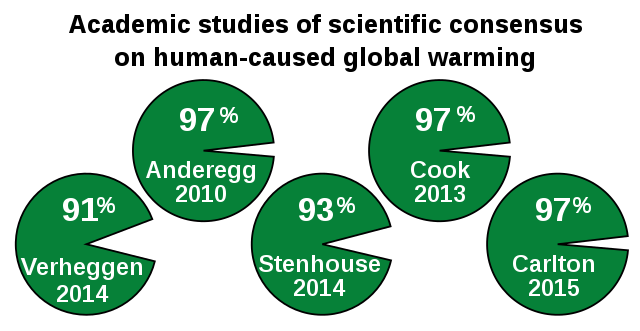

Climate change, more than any other environmental concern, has dominated the attention of Americans in recent years (and has, in many cases, unfortunately pushed pollution off the table). Although the idea that the planet’s climate has been adversely affected by human activity is very controversial in the media, politics, and popular culture, it is almost universally accepted by scientists. According to NASA, at least 97% of climate scientists agree that global warming over the past couple of centuries is due to human activities, that is, anthropogenic. American and international science organizations such as the American Geophysical Union, the American Meteorological Society, and the American Medical Association, in addition to the Intergovernmental Panel on Climate Change, have all gone a step further, agreeing with the American Physical Society that “we must reduce emissions of greenhouse gases beginning now.”

Of course, whom they mean by “we” is unclear, and the costs of heeding their warnings are hard to specify. We often hear that “the forecasts show that it is China, India, and the other emerging economies that are increasing their carbon dioxide emissions at a speed that will cause dangerous climate change. In fact, China already emits more CO2 than the USA, and India already emits more than Germany.” This objection came from a European Union environment minister speaking at the World Economic Forum at Davos in 2007. The population of China is 1.386 billion, India’s is 1.339 billion, the U.S.’s is 327 million, and Germany’s is 82.79 million. So although China emits more CO2 in total than the U.S., on a per-person basis we still emit about twice as much (15.7 tons per person to China’s 7.7 tons).

Similarly, on a per capita basis, Germany emits more (9.7 tons) than China and more than five times as much as India (1.8 tons per capita). While it’s important for China and India to get their carbon emissions under control, it is unacceptable for Europe and America to try to shift the blame when we still outproduce them on a per capita basis, especially since most of the carbon now in the atmosphere was put there by us.
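The per capita argument in the two paragraphs above is straightforward arithmetic. The short sketch below uses only the per capita emissions and population figures quoted in the text (the helper names `total_tons` and `per_capita_ratio` are hypothetical); the totals it computes are derived by multiplying those figures, not independent data, and the quoted figures may not all refer to the same year.

```python
# Figures as quoted in the text: tons of CO2 per person, and population.
PER_CAPITA_TONS = {"United States": 15.7, "China": 7.7, "Germany": 9.7, "India": 1.8}
POPULATION = {"United States": 327e6, "China": 1.386e9, "Germany": 82.79e6, "India": 1.339e9}


def total_tons(country):
    """Total annual emissions implied by per capita emissions times population."""
    return PER_CAPITA_TONS[country] * POPULATION[country]


def per_capita_ratio(a, b):
    """How many times more CO2 a resident of country a emits than one of country b."""
    return PER_CAPITA_TONS[a] / PER_CAPITA_TONS[b]


if __name__ == "__main__":
    for country in PER_CAPITA_TONS:
        print(f"{country}: {total_tons(country) / 1e9:.2f} billion tons in total")
    print(f"U.S. vs. China per person: {per_capita_ratio('United States', 'China'):.1f}x")
    print(f"Germany vs. India per person: {per_capita_ratio('Germany', 'India'):.1f}x")
```

Both of the minister’s total-emission claims hold on these figures (China’s implied total is roughly twice the U.S. total, and India’s exceeds Germany’s), yet each comparison reverses once emissions are divided by population.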

Questions for Discussion

- Why is atmospheric carbon a global rather than a regional or national problem?

- Why is it misleading to compare current total carbon emissions between countries in trying to assess “blame”?

You can view the trailer for An Inconvenient Truth (2006) on YouTube at this link: https://youtu.be/Bu6SE5TYrCM

Climate science is complicated, and some people find it difficult to understand. To make matters worse, many Americans share a belief system that distrusts science and scientists, in part because science seems to contradict their most cherished religious doctrines about the nature of the world and their place in it. To make matters even worse, concern over climate change has been identified with a particular political orientation, with people like former Vice President Al Gore seeming to suggest that anyone who isn't alarmed by climate change is a monster who doesn't love their children. The claim that only liberals care about the environment is not only absurd; it also ignores the traditional meaning of the word "conservative," which shares its root with "conservation." In most countries, climate change has not been a liberal versus conservative issue during the past several decades. In the United States, however, in addition to the negative reaction many people had to the tone of messages like the trailer linked above, the argument against recognizing climate change and doing something about it has been carefully managed and funded by political action committees and foundations representing corporations that oppose changes in fossil fuel energy policy.

Although 97% of climate scientists agree that climate change is anthropogenic, when pollsters ask Americans, "Do most scientists believe that the Earth is getting warmer because of human activity?" 55% answer either "No" or that they don't know. Fewer than half of Americans are aware that scientists are nearly unanimous on this issue, and believing that scientists are unsure affects both their own opinions about climate change and the government policies they are willing to support to mitigate it. A recent study found that most of the public statements disputing climate change made from 2003 to 2010 could be traced to 91 organizations that received $558 million in funding during that period. From 2003 to 2007, this money was easily traceable to sources such as ExxonMobil and Koch Industries, two corporations opposed to any changes in energy policy.

With the changes in political funding that followed the Supreme Court's 2010 Citizens United decision, which allowed unlimited independent political spending by corporations, much of it through groups that need not disclose their donors, the sources of money paying for climate change denial have become more difficult to trace. On the surface, the ideological position of Koch Industries appears to be "free market." However, the company is itself deeply tied to the fossil fuel industry: the Koch family fortune was built on oil refining, and in the 1930s Fred Koch's engineering firm helped build an oil refinery in Nazi Germany. Koch Industries has since stifled market innovation in green energy. Climate change–denying lobbyists have warned of the "command economies" they claim environmentalists wish to impose, using language designed to rile up libertarians and free market enthusiasts and to mobilize them against economic changes that would be very bad for large fossil fuel corporations but would almost certainly create millions of new jobs.

Another study found that conservative think tanks funded by energy corporations play a central role in "denying the reality and significance of anthropogenic global warming (AGW), especially by manufacturing uncertainty over climate science." It identified 108 books denying climate change published through 2010, most of them linked to conservative think tanks. American think tanks have also recently begun funding publishing in foreign countries to spread their message.

The study also examined the credentials of the authors and editors of these climate change denial books and found that "an increasing portion of denial books are produced by individuals with no scientific training. It appears that at least 90% of denial books do not undergo peer review, allowing authors or editors to recycle scientifically unfounded claims that are then amplified by the conservative movement, media, and political elites." Needless to say, such books do not meet the standards of rigor expected in college classrooms. Nonetheless, their political impact is substantial, as a comparison between the American economy and other leading economies shows.

While Germany is becoming a world leader in solar energy deployment in spite of receiving only as much sunlight as Alaska, and while Britain maps its shift to a post-oil economy through its "transition towns," Americans have wasted a substantial amount of time, even as certain states have begun to move forward. In Upstate New York, for instance, smallholder farms driven into financial ruin by the expansion of corporate agriculture have begun converting to solar farms. The vast majority of contemporary economists agree that there are currently more jobs in the renewable energy sector than in the fossil fuel sector, that this trend will continue, and that, overall, the economy would be better off if we shifted to sustainable energy sources and reduced carbon emissions. Of course, the petrochemical industry would shrink drastically, but it would not disappear entirely: even if fossil fuels were no longer burned for energy, petrochemical products could not be eliminated from consumption until alternatives were found. In any case, a shift toward solar energy could only ever be part of the solution to the many cascading crises associated with dramatic climate change.

A shift toward solar energy could help parts of certain states in the United States, even where the petrochemical industry has long been influential. Industrially ravaged, heavily polluted sites that are difficult to use for anything else are known as "Superfund sites," a designation of the Environmental Protection Agency, which was established under the Republican Nixon administration in 1970. In Vermont, a Superfund site that was formerly a copper mine was successfully converted into a solar farm in the early 21st century, showing that such land can be put back to productive use. With 27 Superfund sites in Louisiana as of this writing, many are ripe for conversion into solar power facilities, tidal power facilities, or other uses.

States like Louisiana are also particularly vulnerable to climate change. The state is expected to get hotter, with both floods and droughts becoming more severe and hurricanes becoming less predictable and more intense. Furthermore, under recently revised estimates, sea level is expected to rise by around 12 inches by 2050. Think of this in terms of a 30-year mortgage on a house: if you buy property in Louisiana today and that property sits 11 inches above sea level, you can expect it to be underwater, literally, by the time the mortgage is paid off. When Lake Charles, Louisiana, a city in the drier western part of the state that rarely gets hit by hurricanes, was pummeled by two major storms in a single year, its Republican mayor, Nic Hunter, took to the pages of the national press, pleading for help and for action to address climate change.

The scientific consensus on climate change is clear. Of nearly 14,000 peer-reviewed climate articles published between 1991 and 2012, only 24 (less than 0.2%) reject global warming. There is really no doubt that the Earth's climate is changing in ways that will cause serious social disruption in the future. When political leaders say there is still doubt about the need to address climate change, we should follow the money to discover why. Our unwillingness or inability to understand the situation leaves us open to continued manipulation by organizations interested in maintaining the status quo and may mean that our future choices will be much more limited than they need to be.

Questions for Discussion

- How does politicizing the climate debate make it more difficult to find solutions?

- What motivates some organizations to resist the idea that humans have caused global warming?

- Would addressing climate change more rapidly hurt or help the global economy?

Media Attributions

- 1889-VernadskyVI-Paris © Bosch & Co. Paris is licensed under a Public Domain license

- Hubbert_peak_oil_plot.svg © Hankwang is licensed under a CC BY (Attribution) license

- Common_Era_Temperature.svg © Efbrazil is licensed under a CC BY-SA (Attribution ShareAlike) license

- CO2_Emissions_by_Source_Since_1880.svg © Efbrazil is licensed under a CC BY-SA (Attribution ShareAlike) license

- 2019_Worldwide_CO2_Emissions_(by_region,_per_capita),_variwide_chart © Tom.schulz is licensed under a CC BY-SA (Attribution ShareAlike) license

- Academic_studies_of_scientific_consensus_-_global_warming,_climate_change-en.svg © RCraig09 is licensed under a CC BY-SA (Attribution ShareAlike) license

- 20200327_Climate_change_deniers_cherry_picking_time_periods © RCraig09 is licensed under a CC BY-SA (Attribution ShareAlike) license

- SoSie+SoSchiff_Ansicht © Andrewglaser is licensed under a CC BY-SA (Attribution ShareAlike) license